Overcoming the Challenges of Bringing AI to the Edge

Introduction

Deep learning has developed rapidly over the past ten years with the introduction of open-source neural networks and the availability of large, annotated datasets both made possible by the efforts of academics and large technology platforms. The concept of a perceptron neural network was first described in the 1950s however it was not until recently that the adequate training data, neural network frameworks, and the requisite processing power came together to help launch the AI revolution. The Teledyne FLIR Infrared Camera OEM division focuses on extracting decision support from infrared and visible video cameras deployed in a wide range of applications including automotive safety, autonomy, defense, marine navigation, security, and industrial inspection. Through extensive and on-going development work, Teledyne FLIR offers multispectral imaging solutions optimized for size, weight, power, and cost (SWaP+C) by leveraging the most advanced vision processors, efficient neural networks, large image datasets, and synthetic image data.

Hardware Considerations

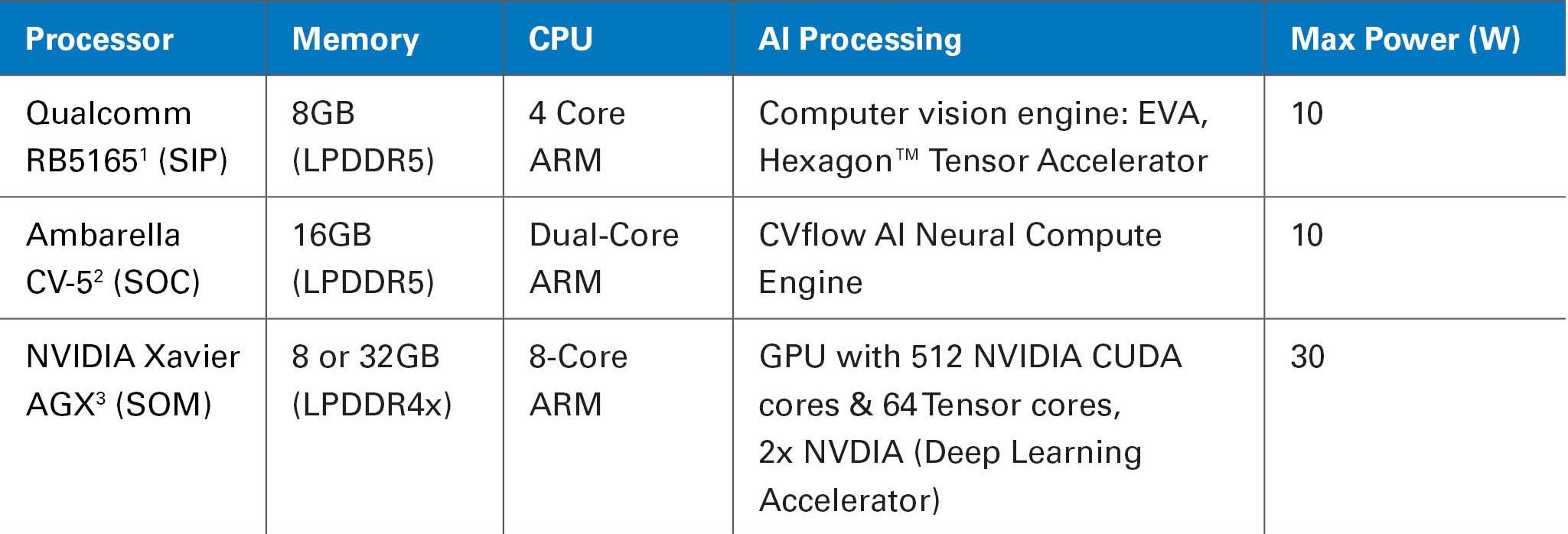

Developers make many decisions when designing cameras with onboard intelligence at the edge. The most impactful is the selection of the vision processor. Until recently this choice was basically limited to NVIDIA, due to their superior graphic processing technology which was leveraged for the highly parallel computational demands of neural networks. In addition, most of the open-source intellectual property (IP) for network training and runtime deployment was developed on and for the NVIDIA ecosystem. While NVIDIA continues to be a powerful platform, cost, size, and power consumption can potentially limit the viability of compact smart cameras. Over the past several years the leading vision processor suppliers including Qualcomm, Intel, Ambarella, Xilinx, Altera, MediaTek, and others have developed chip architectures that feature neural network cores or computational fabrics designed to process neural network computational loads at significantly lower power and cost.

A growing number of suppliers offer powerful vision processors; however it is often not feasible for smaller developers to source cutting-edge processors directly from the manufacturers who typically direct smaller volume customers to partner firms offering SOM (system on module) solutions and technical support. While this is a good option for some developers, it is often advantageous to work directly with the vision processor supplier as the integration of complex multi-threaded runtime routines requires close support from the vision processor suppliers.

Table 1. Example Compute Platforms

- https://www.qualcomm.com/media/documents/files/qualcomm-robotics-rb5-platform-product-brief.pdf

- https://3vpstm1hc6e52739x31131p2-wpengine.netdna-ssl.com/wp-content/uploads/Ambarella_CV5_Product_Brief_15JAN2021.pdf

- https://www.nvidia.com/en-us/autonomous-machines/embedded-systems/jetson-agx-xavier

There are several important considerations when evaluating AI compute platforms. The first is the effective number of ALUs (arithmetic logic units) available to perform AI workloads. It is common to utilize neural accelerators, GPUs, DSPs, and CPUs for portions of the workload. When making a hardware selection it is important to understand the strengths and weaknesses of each one, and to budget resources accordingly. The ability to run multiple software routines simultaneously is critical to automatic target recognition software. If there is not adequate processing power, the software must fit the various routines into the time slice available which results in dropped frames. The second important consideration is the type and amount of memory the processor can access. Sufficient and fast memory is important to achieve inference at high frame rates while running all the software routines like warp perspective, optical flow, object tracking and the object detectors.

Teledyne FLIR selected processors with integrated LPDDR5 memory with at least 8GB designed into several intelligent cameras including the new Triton™ security camera. The Teledyne FLIR AI stack software requires between 3 to 10 watts when running on Ambarella CV-2 or Qualcomm RB5165. Power consumption is managed by selecting networks and proposal routines to fit the power budget specified by the integrator. Object detection performance is impacted by these configurations, but performance gains continue to be made with more efficient neural networks and new node generation vision processor hardware.

Convolutional Neural Network Considerations and Performance

While there are an increased number of processor choices for running models at the edge, model training is typically done on NVIDIA hardware due to the very mature deep learning development environment built on and for NVIDIA GPUs. Neural network training is very computationally demanding and when training a model from scratch a developer can expect training times of up to 5 days on a high-end multi-GPU machine. Network training can be done on popular cloud service platforms however the compute costs on these platforms is expensive and the long data upload and download time is a consideration. To support development, Teledyne FLIR operates dedicated local servers for network training to manage schedule and costs.

The second decision a developer must make is to select the neural network architecture. In the context of computer vision, a neural network is typically defined by its input resolution, operation types, and configuration/number of layers. These factors all translate to the number of trainable parameters which have a high influence on the computational demand. Computational demands translate directly to power consumption and the thermal loads that must be accounted for during the design of products.

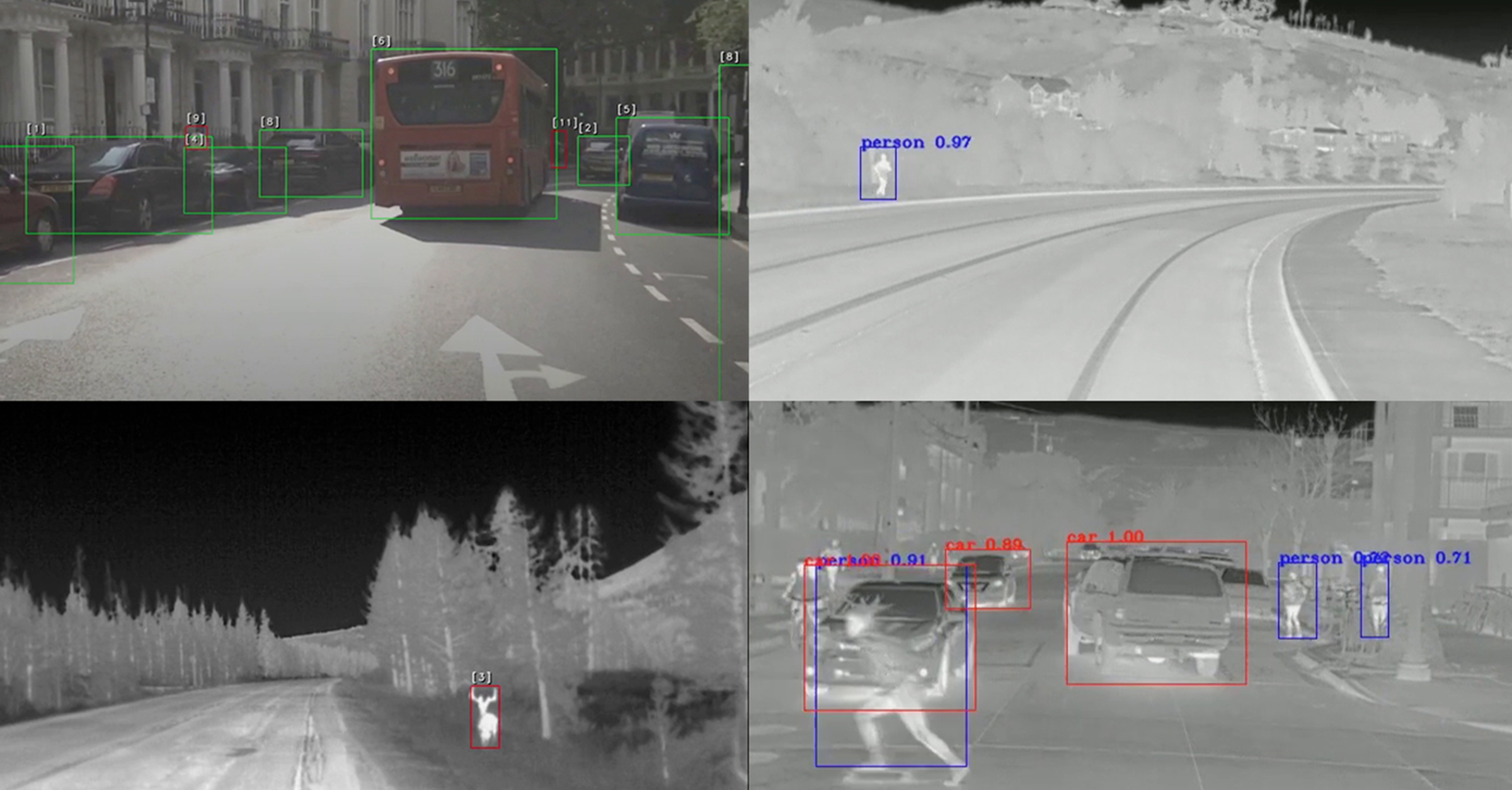

The trade space dictates tradeoffs between object detection accuracy and high frame rates for a given vision processor’s computational bandwidth. Video camera users typically demand fast and accurate object detection that enable both human and automatic response by motion control systems or alarms. A good example is Automatic Emergency Braking (AEB) for passenger vehicles, where a vision-based system can detect a pedestrian or other objects in milliseconds and initiate braking to stop the vehicle. Another example is a counter-drone system that must track objects of interest and provide feedback to motion control systems to direct counter measures to disable drones.

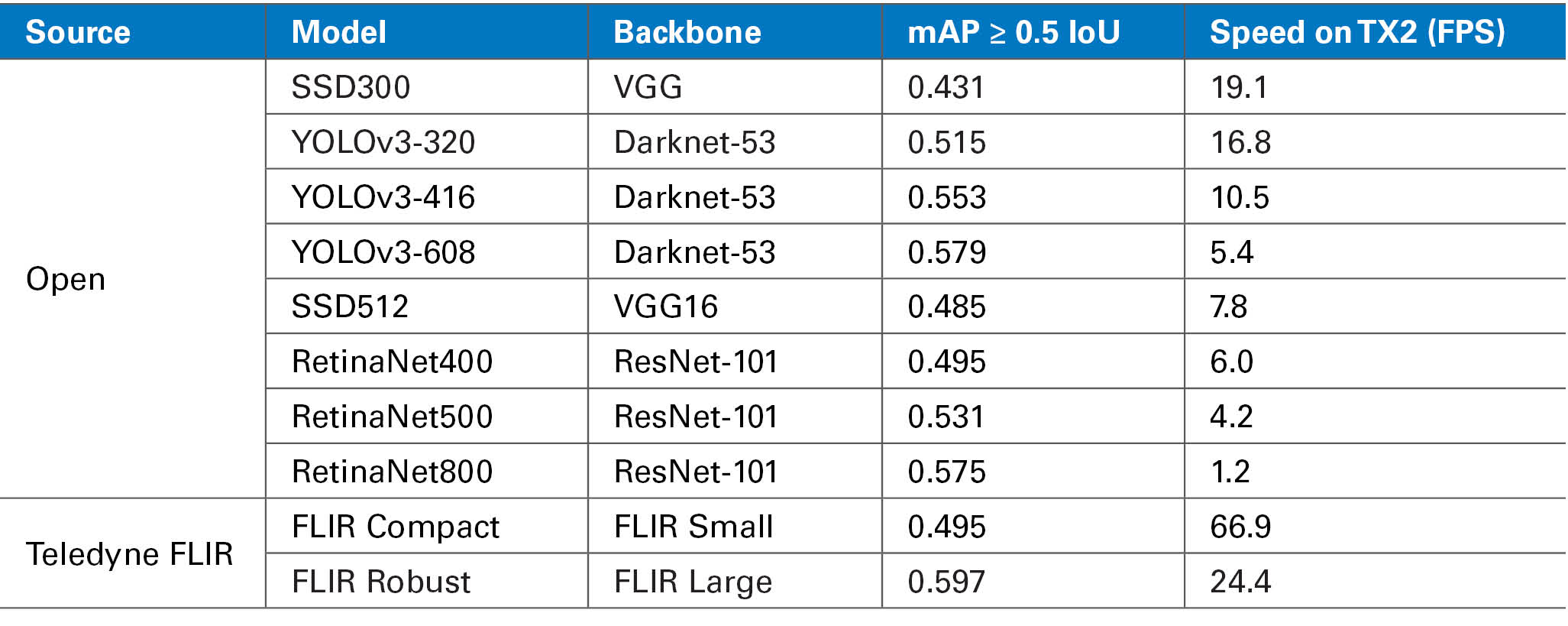

Table 2. Popular Open Source and Teledyne FLIR Neural Network Performance as Tested by Teledyne FLIR Running the COCO Test Dataset 2018 on a NVIDIA TX2.

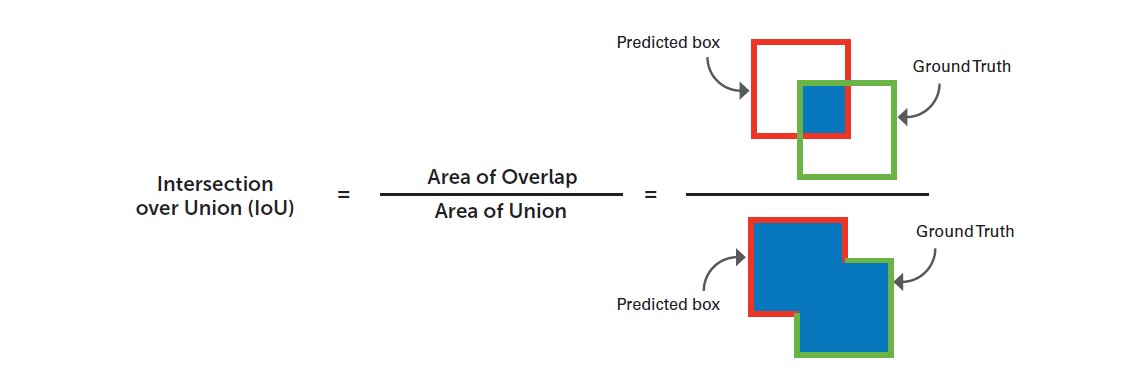

For comparison purposes, Table 2 includes several popular open source and Teledyne FLIR neural networks and their performance as tested by Teledyne FLIR running the COCO[1] test dataset on a NVIDIA TX2. Mean average precision (mAP) is the most common scoring metric in object detection. Intersection over union (IoU) is used to determine if an object detection is a "match" or a "miss" as shown in Figure 1. High matches, low misses, and low false positives correlate with higher mAP scores. Table 2 includes mAP scores of each model using an IoU value of greater than or equal to 0.5 and the resulting processing speed in frames per second (FPS).

Figure 1 - Illustration of IoU Performance Measure

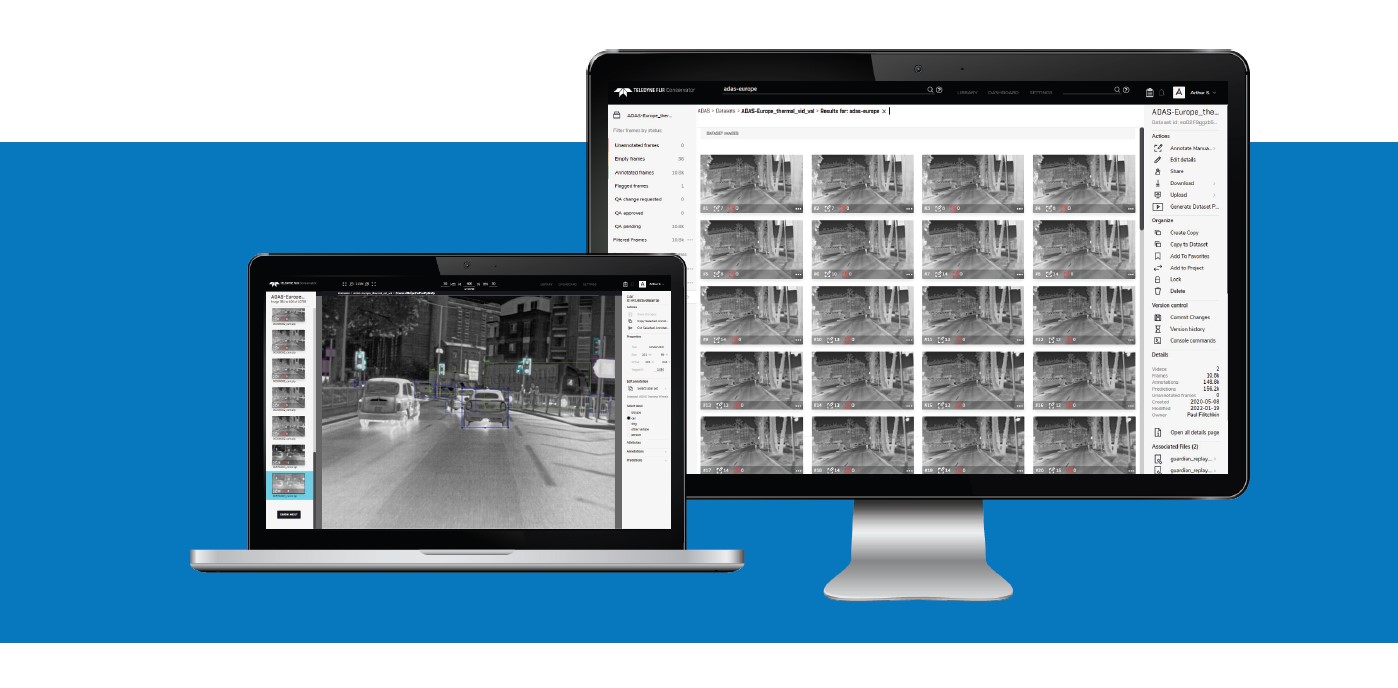

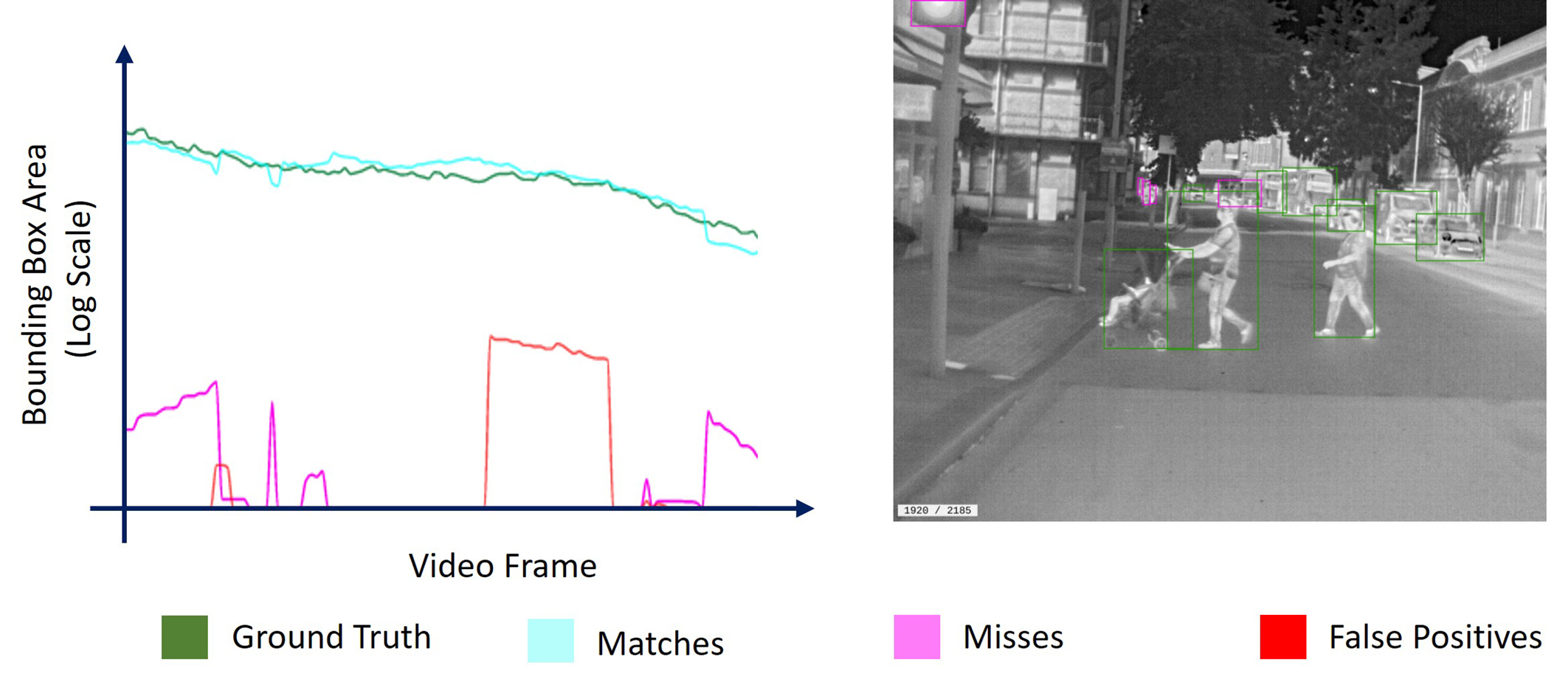

In addition to understanding the performance of models, data scientists need to analyze the cause of false positives and false negatives or “misses.” Teledyne FLIR developed Conservator™, a subscription-based dataset management cloud software that includes a local visual model performance tool capable of visualizing model performance. It can be used to interactively explore and identify areas where the model performs poorly, enabling the data scientist to investigate the specific training dataset images that cause the missed detections. Developers can quickly modify or augment the training data, retrain, retest, and iterate until the model converges on the performance required. Figure 2 includes an example output illustrating how the model performs at each point in the video sequence. Bounding box area from ground truth, object matches, object misses, and false positives are plotted side-by-side to help pinpoint any areas of concern.

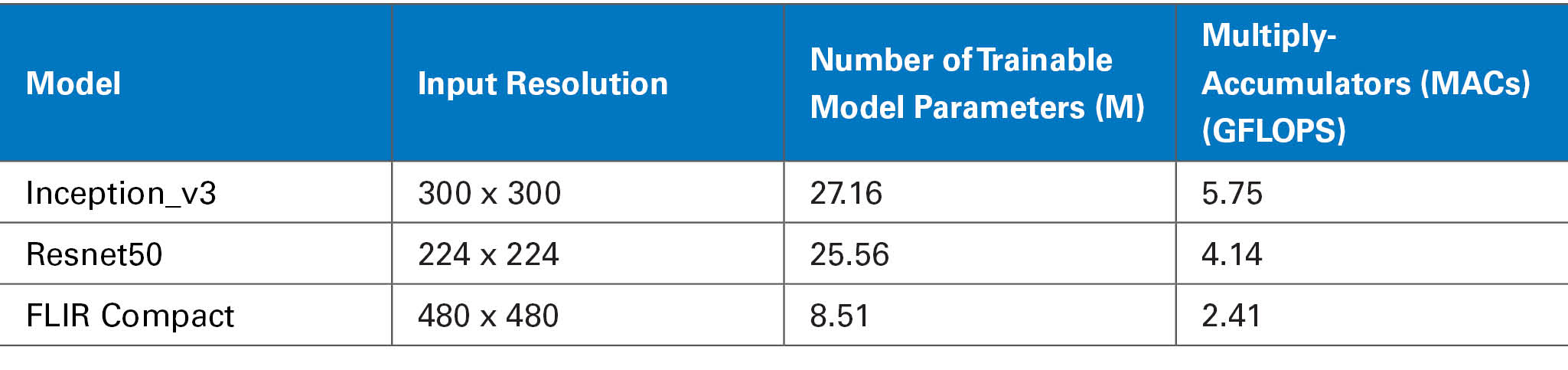

It is instructive to understand the number of calculations neural networks are performing. For video applications such as automotive safety systems, computations are performed on every video frame. It is critical to get rapid object detections to eliminate response delay. For other applications including counter UAS (C-UAS) or counter drone systems, quick detection and object location meta data is a critical input into a video tracker that controls the camera and counter measure pointing actuators. Table 3 includes neural network parameters, input resolution, and the associated processing demands for four example models for informational and comparison purposes. These estimations do not account for how well the architecture utilizes the specific hardware, so it is important to note that the most reliable way to benchmark a model is to run the model on the actual device.

Figure 2. Example Conservator Visual Model Performance Software Output and Associated Infrared Image

Table 3. Example Neural Network Processing Demands and Input Resolution

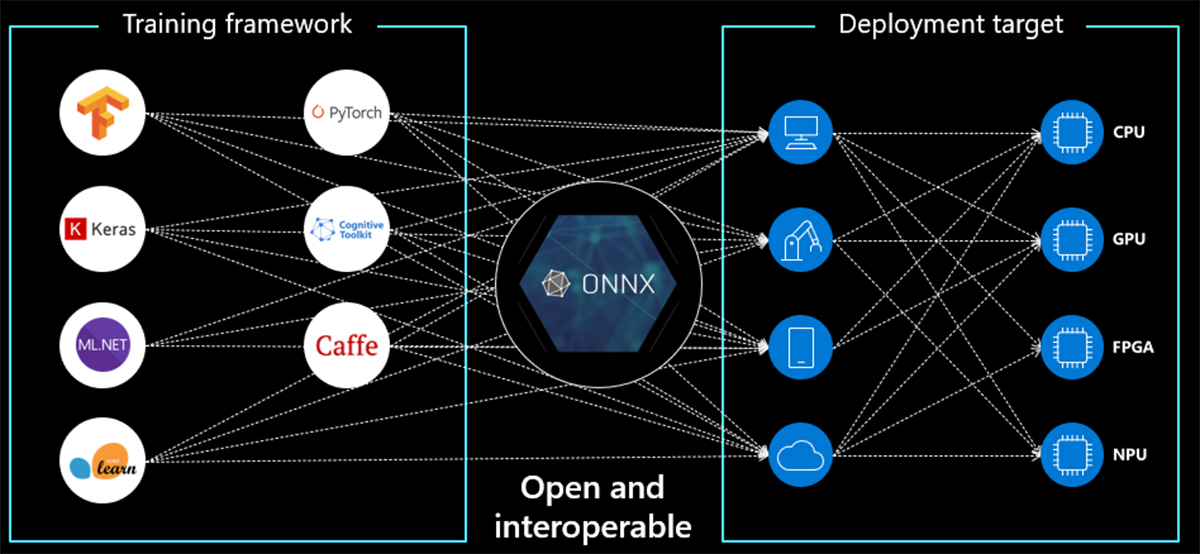

At the end of a training process, the model typically needs to be converted to run on the target vision processor’s specific execution fabric. The translation and fit process is extremely complex and requires a skilled software engineer. This has been a significant point of friction in faster deployment of AI in cameras. In response, an industry consortium established ONNX AI, an open-source project that established a model file format standard and tools to facilitate runtime on a wide range of processor targets. As ONNX becomes fully supported by the vision processor suppliers and the developer community, the efforts required to deploy models on different hardware will significantly reduce a pain point for developers.

Figure 3: ONNX AI – Image Source https://microsoft.github.io/ai-at-edge/docs/onnx/

The Teledyne FLIR AI stack uses a combination of computer vision technologies to manage computation demands while maximizing confidence in the target’s state. The framework houses a wide range of application-optimized networks and configurations that seamlessly combine routines including motion detection, stochastic search, fine-grain classification, multi-object tracking, and sensor fusion with external inputs such as a radar. The framework allows any combination of networks and routines to be selected at runtime using application-specific configurations.

Current models offer pixel inputs as large as 1024 x 768, which reduce the need to decimate an image to fit into a model when running inference on mega pixel cameras. This can translate directly to long range object detection performance by maximizing the number of pixels on target. In addition, this ensures maximum pixels input into fine-grain classifiers that can output object features such as a specific vehicle model or friend or foe detection.

Teledyne FLIR AI Stack Development

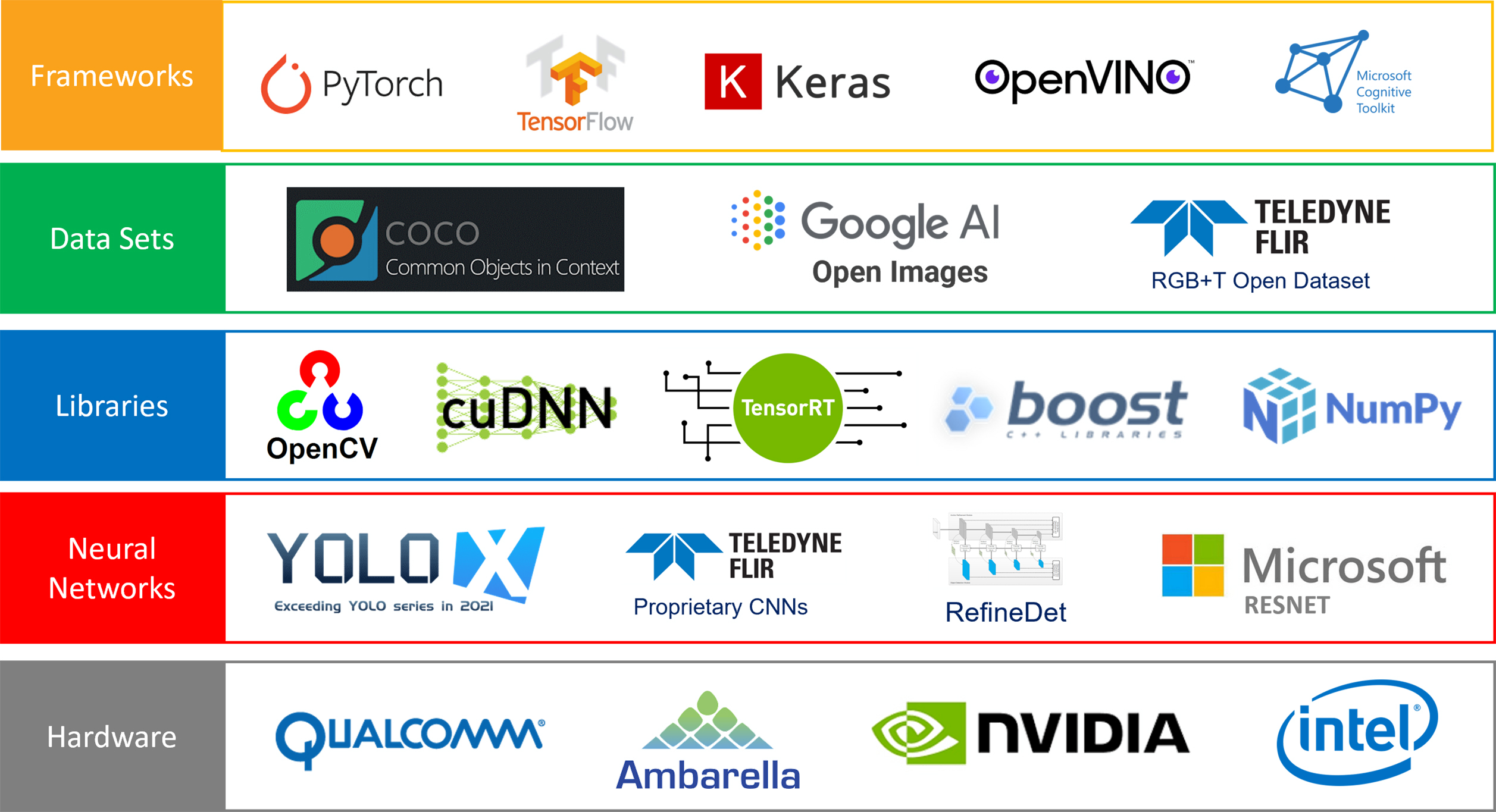

Figure 4 includes examples of the frameworks, datasets, libraries, neural networks, and hardware that make up the typical AI stack. Teledyne FLIR develops and manufactures longwave infrared (LWIR), midwave infrared (MWIR), and visible light cameras that can and are being developed to utilize AI at the edge. Given the requirements and lack of mature tools associated with multispectral sensing, unique software, datasets, and more have been developed to support AI at the edge using Teledyne FLIR sensors.

Teledyne FLIR uses the PyTorch framework, which is tightly integrated with Python, one of the most popular languages for data science and machine learning. PyTorch supports dynamic computational graphs allowing the network behavior to be changed programmatically at runtime. In addition, the data parallelism feature allows PyTorch to distribute computational work among multiple GPUs as well as multiple machines to decrease training time and improve accuracy.

Datasets for object detection are large collections of images that have been annotated and curated for class balance and characteristics such as contrast, focus, and perspective. It is industry best practice to manage a dataset like software source code and to utilize revision control to track changes. This ensures machine learning models maintain consistent and reproducible performance. If a developer encounters performance issues with a model, data scientists can quickly identify where to augment the dataset to create a continuous improvement lifecycle. Once a verified improvement has been made, the data change is recorded with a commit entry that can then be reviewed and audited. Teledyne FLIR Conservator, a subscription-based dataset management software, facilitates revision control on terabyte-scale data lakes along with strong data protection and access features to enable distributed teams. In addition, there are over 6.1 million multispectral images and over 25 million annotated objects (as of December 2021) available to subscribers.

While open-source datasets like COCO are available, they are visible light image collections containing common objects at close range captured from a ground level perspective. Teledyne FLIR is focused on applications that require multi-spectral images taken from air to ground, ground to air, across water and of unique objects including military objects. To facilitate the evaluation of thermal imaging by automotive safety and autonomy systems developers, Teledyne FLIR created an open-source dataset featuring over 26,000 matched thermal and visible frames. Because many users rely on Teledyne FLIR cameras to accurately classify objects at long distances, images of targets at various distances have been added to ensure models work well as small object detectors.

Figure 4. Representative AI Stack

In the real world, objects are viewed in near infinite combinations of distance, perspective, background environments, and weather conditions. The accuracy of machine learning models is largely dependent on how well training data represents field conditions. Teledyne FLIR developed a tool that analyzes a dataset’s imagery and quantifies the data distribution based on object label (% of images of person, car, bicycle, etc.), object size, contrast, sharpness, and brightness. The tool is then able to correlate model performance to data characteristics and produce a pdf-based datasheet for each new model release. This analysis is very important to data scientists and is valuable in the ongoing iteration of model development.

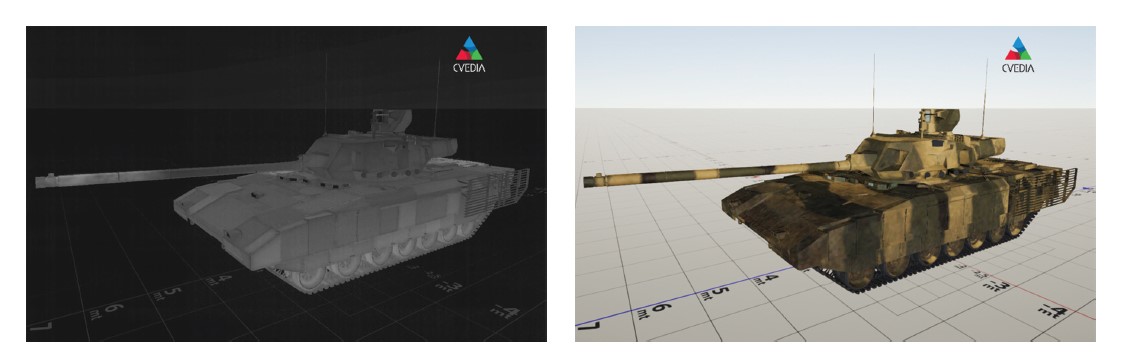

The time and expense to build large training datasets is significant and requires field data collection, curation of frames, annotation, and quality control over label accuracy. This is a bottleneck in deploying AI. Teledyne FLIR recognized training data as a critical component of the AI stack and in response, turned to the field of synthetic data. Teledyne FLIR works closely with CVEDIA, a synthetic data technology company, to develop the tools and IP necessary to create multispectral data and models using computer generated imagery (CGI). This powerful tool enables the creation of multispectral imagery of almost any object from any perspective and distance. The result is the ability to create datasets of unique objects like foreign military vehicles that would be extremely challenging to do relying on field data collection.

Figure 5: Synthetic Training Data in LWIR and Visible Light – Courtesy of CVEDIA

AI at the Edge in Production

There is a convergence of development and technology enabling a clearer path to deploy affordable and functional AI at the edge. Lower cost hardware is being released with improved processing performance that can be used with more efficient neural networks. Software tools and standards to simplify model creation and deployment are promising and ensure developers can add AI to their cameras with lower monetary investment. The open-source community and model standards from the ONNX community are contributing to have also aided in the acceleration of AI at the edge.

As integrators demand AI at the edge in industrial, automotive, defense, marine, security, and other markets, it is important to recognize the engineering effort required to move a proof-of-concept demonstration of AI at the edge to production. Developing training datasets, addressing performance gaps, updating training data and models, and integrating new processors requires a team with diverse skills. Imaging systems developers will need to carefully consider the investment required to build this capability internally or when selecting suppliers to support their AI stack.